Welcome back to the sixth part of our Prompt Engineering series! At Tilburg.ai, we believe that the best way to learn is by working together and combining insights. Here’s a little secret: language models are great at that too.

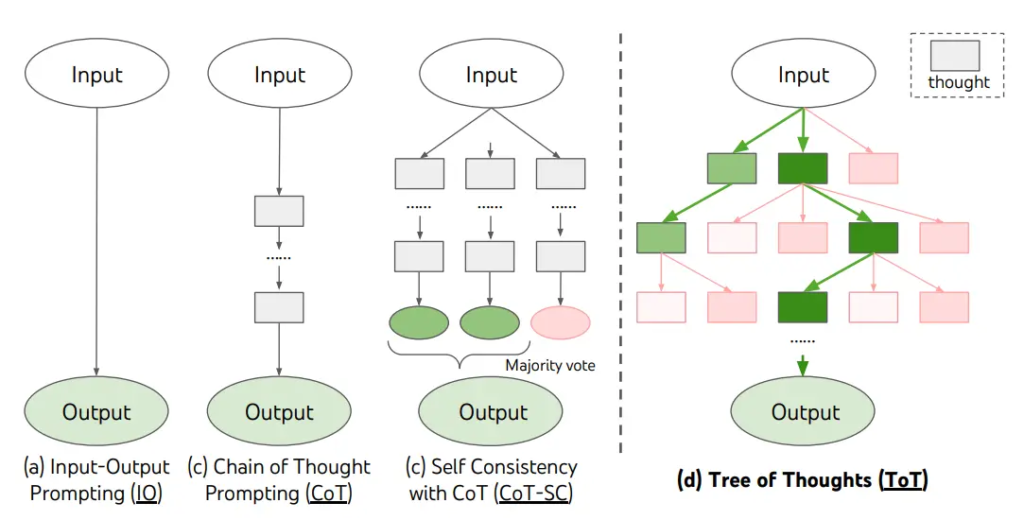

Tree of Thoughts (ToT) prompting is a technique that builds on Chain-of-Thought (CoT) prompting to strengthen the reasoning capabilities of large language models (LLMs). Where CoT encourages a model to verbalize its thought process step by step on the way to an answer, ToT goes a step further and lets the LLM explore multiple paths of reasoning at the same time. The approach is inspired by how humans tackle complex problems: through trial and error, and by weighing different possibilities. The idea is that an LLM, just like a human, can recognize and correct errors by trying different lines of thought, and thus arrive at a better solution.

Just as people consider different strategies when solving a complex problem, ToT explores different “thoughts” or intermediate steps. Take the prompt below as an example of how it works.

Prompt:

- Role: You are a coach who guides three virtual experts:
  - An Analytical Expert (specialized in logic and fact checking).
  - A Creative Expert (specialized in outside-the-box thinking).
  - A Pragmatic Expert (oriented towards feasible solutions).

- Assignment: Answer the following question:
  - Each of you briefly and clearly notes your line of thought ("thought 1").
  - You compare each other's lines of thought and adjust them if there is a clear error.
  - Then each of you notes a second intermediate step ("thought 2"), based on the insights from the first round.
  - Then come to a summary answer together.

Question: [Fill in your question or problem here]
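If you reuse this prompt often, it can help to keep it as a template and fill in only the question. Below is a minimal Python sketch; the names `EXPERT_TOT_TEMPLATE` and `build_expert_prompt` are hypothetical, and the example question is made up.

```python
# Minimal sketch: keep the three-experts ToT prompt as a template.
# EXPERT_TOT_TEMPLATE and build_expert_prompt are hypothetical names.

EXPERT_TOT_TEMPLATE = """\
Role: You are a coach who guides three virtual experts:
- An Analytical Expert (specialized in logic and fact checking).
- A Creative Expert (specialized in outside-the-box thinking).
- A Pragmatic Expert (oriented towards feasible solutions).

Assignment: Answer the following question:
- Each of you briefly and clearly notes your line of thought ("thought 1").
- You compare each other's lines of thought and adjust them if there is a clear error.
- Then each of you notes a second intermediate step ("thought 2"), based on the insights from the first round.
- Then come to a summary answer together.

Question: {question}
"""


def build_expert_prompt(question: str) -> str:
    """Return the three-experts prompt with the question filled in."""
    if not question.strip():
        raise ValueError("question must not be empty")
    return EXPERT_TOT_TEMPLATE.format(question=question.strip())


# Usage: paste the result into a chat window or send it through your LLM client.
print(build_expert_prompt("Should our study association switch to a new event-planning tool this year?"))
```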

The higher-level framework of Tree of Thoughts (ToT)

The ToT framework is a modular system, consisting of several components that work together to improve the reasoning capabilities of LLMs. It can be seen as a tree of possible thoughts, where each node represents an intermediate step in the reasoning process.
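A minimal sketch of that tree as a data structure, assuming each thought is plain text. `ThoughtNode` is a hypothetical name, not part of any library.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # compare nodes by identity; field-by-field equality might recurse via parent links
class ThoughtNode:
    """One node in a tree of thoughts: a single intermediate reasoning step."""

    thought: str
    parent: Optional[ThoughtNode] = field(default=None, repr=False)
    children: List[ThoughtNode] = field(default_factory=list)

    def add_child(self, thought: str) -> ThoughtNode:
        """Attach a new candidate thought that continues from this one."""
        child = ThoughtNode(thought, parent=self)
        self.children.append(child)
        return child

    def path(self) -> List[str]:
        """Return the thoughts from the root down to this node."""
        steps: List[str] = []
        node: Optional[ThoughtNode] = self
        while node is not None:
            steps.append(node.thought)
            node = node.parent
        return steps[::-1]


# Usage: a question with two sub-problems, one of which already has an option.
root = ThoughtNode("Question: plan a three-day study schedule")
thought_a = root.add_child("Thought A: list the topics to cover")
option_1 = thought_a.add_child("Option 1: one topic per day")
root.add_child("Thought B: estimate the time needed per topic")
print(option_1.path())
# ['Question: plan a three-day study schedule', 'Thought A: list the topics to cover', 'Option 1: one topic per day']
```

Following the `parent` links from any node back to the root recovers the chain of thoughts that led there, which is what a search algorithm needs when it backtracks.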

The main components of Tree of Thoughts Prompting

Thought Decomposition

The problem is broken down into smaller, manageable steps, the “thoughts”. The nature of these thoughts can vary depending on the problem. A thought should be “small” enough for the model to generate various possibilities, but also “large” enough to evaluate progress towards the solution.

For example, in a math problem a thought might be an intermediate equation, while in a creative writing task it might be a short writing plan.
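To make the math case concrete, the sketch below uses the Game of 24, a puzzle often used to illustrate ToT: combine four numbers with +, -, * and / so that the result is 24. Here a thought is one intermediate equation that merges two of the remaining numbers. This is a minimal sketch under our own assumptions: the function name `next_thoughts` is hypothetical, and exact fractions are used so that division does not introduce rounding errors.

```python
from __future__ import annotations

from fractions import Fraction
from itertools import combinations
from typing import List, Tuple


def next_thoughts(numbers: List[Fraction]) -> List[Tuple[str, List[Fraction]]]:
    """List every possible next thought for a Game of 24 state.

    A thought picks two of the remaining numbers, combines them with
    +, -, * or /, and writes the result down as an intermediate equation.
    Each thought is returned together with the numbers left afterwards.
    """
    thoughts = []
    for i, j in combinations(range(len(numbers)), 2):
        a, b = numbers[i], numbers[j]
        rest = [n for k, n in enumerate(numbers) if k not in (i, j)]
        candidates = [(f"{a} + {b}", a + b), (f"{a} * {b}", a * b),
                      (f"{a} - {b}", a - b), (f"{b} - {a}", b - a)]
        if b != 0:  # skip division by zero
            candidates.append((f"{a} / {b}", a / b))
        if a != 0:
            candidates.append((f"{b} / {a}", b / a))
        for expression, value in candidates:
            thoughts.append((f"{expression} = {value}", rest + [value]))
    return thoughts


start = [Fraction(n) for n in (4, 9, 10, 13)]
for equation, remaining in next_thoughts(start)[:3]:
    print(equation, "| left:", ", ".join(str(n) for n in remaining))
# 4 + 9 = 13 | left: 10, 13, 13
# 4 * 9 = 36 | left: 10, 13, 36
# 4 - 9 = -5 | left: 10, 13, -5
```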

Step 1 (Thought Decomposition)

Break down the following question into up to 3 smaller sub-questions or sub-problems. Label each sub-problem as “Thought A”, “Thought B” and “Thought C”.
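If you run Step 1 through an API rather than a chat window, you need to pull the labeled sub-problems out of the reply before you can work on them. A minimal sketch, assuming the model starts each label on its own line as "Thought A: ..."; the regular expression and the name `parse_subproblems` are our own, and real replies may need a more forgiving pattern (for example, when the model adds markdown bold).

```python
from __future__ import annotations

import re
from typing import Dict

# Assumption: each label starts its own line, e.g. "Thought A: ...",
# followed by a colon, period or hyphen.
LABEL_PATTERN = re.compile(r"^[ \t]*Thought[ \t]+([A-C])[ \t]*[:.\-][ \t]*(.+)$", re.MULTILINE)


def parse_subproblems(reply: str) -> Dict[str, str]:
    """Map each label ("A", "B", "C") to the sub-problem written after it."""
    return {label: text.strip() for label, text in LABEL_PATTERN.findall(reply)}


reply = """Here is my breakdown.
Thought A: Which topics does the exam cover?
Thought B: How much time is left before the exam?
Thought C - Which topics do I find hardest?"""
print(parse_subproblems(reply))
# {'A': 'Which topics does the exam cover?', 'B': 'How much time is left before the exam?', 'C': 'Which topics do I find hardest?'}
```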

Thought Generator

This component generates different candidate thoughts from each state in the reasoning process. There are two common strategies for generating thoughts: independent sampling and sequential proposition. With independent sampling, thoughts are generated independently of each other, often with a Chain-of-Thought prompt. With sequential proposition, thoughts are proposed one after another in the same context, so each new thought takes the previous ones into account.
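The difference between the two strategies is easiest to see in code. The sketch below assumes a hypothetical `llm` function that takes a prompt string and returns the model's reply; the prompt wording and function names are our own. A stand-in model returns canned text, so no API is called.

```python
from __future__ import annotations

from typing import Callable, List

# Hypothetical model interface: prompt in, reply text out
# (for example, a thin wrapper around the LLM API you use).
LLM = Callable[[str], str]


def sample_thoughts(llm: LLM, state: str, k: int) -> List[str]:
    """Independent sampling: k separate calls with the same CoT-style prompt.

    The calls do not see each other's output, so duplicates are possible.
    """
    prompt = f"{state}\nLet's think step by step. Write only the next step."
    return [llm(prompt).strip() for _ in range(k)]


def propose_thoughts(llm: LLM, state: str, k: int) -> List[str]:
    """Sequential proposition: one call that writes k next steps in turn.

    Every proposal is written in the same context as the earlier ones,
    so the model can build on them and avoid repeating itself.
    """
    prompt = f"{state}\nPropose {k} different possible next steps, one per line."
    lines = [line.strip() for line in llm(prompt).splitlines() if line.strip()]
    return lines[:k]


# Stand-in model that returns canned text (no API call is made).
def fake_llm(prompt: str) -> str:
    if "Propose" in prompt:
        return "Option 1: split by topic\nOption 2: split by day\nOption 3: split by difficulty"
    return "Option: split by topic"


state = "Sub-problem: divide 12 study hours over three days."
print(sample_thoughts(fake_llm, state, 2))   # ['Option: split by topic', 'Option: split by topic']
print(propose_thoughts(fake_llm, state, 2))  # ['Option 1: split by topic', 'Option 2: split by day']
```

The duplicate in the first result illustrates why sequential proposition is often preferred when the space of sensible next steps is small.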

Step 2 (Thought Generator)

For each sub-problem, generate at least 2 possible solutions or approaches. Label these as “Option 1” and “Option 2”.

State Evaluator

This component evaluates how much progress the different thoughts make towards the solution. The evaluation can be done independently per state (each thought is given its own value), by comparison (the states are weighed against each other and the most promising one is chosen), or, as in the very first example, by majority voting among the experts.
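A minimal sketch of the two evaluation styles, assuming the scores or votes have already been obtained (in practice they would come from the model, or from the three experts in the first prompt). The function names, options, scores and votes below are all hypothetical.

```python
from __future__ import annotations

from collections import Counter
from typing import Callable, List, Tuple


def evaluate_by_value(states: List[str], score: Callable[[str], float]) -> List[Tuple[float, str]]:
    """Independent evaluation: every state gets its own score; best first."""
    scored = [(score(state), state) for state in states]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


def evaluate_by_vote(states: List[str], votes: List[int]) -> str:
    """Comparison by (majority) vote: each vote is the index of the state an
    evaluator found most promising. Ties go to the earlier state."""
    tally = Counter(votes)
    best = max(range(len(states)), key=lambda i: (tally[i], -i))
    return states[best]


options = ["Option 1: one topic per day", "Option 2: mix topics every day", "Option 3: cram on day three"]

# Hypothetical scores, as a model might assign them on a 1-10 scale.
hypothetical_scores = {options[0]: 7, options[1]: 8, options[2]: 2}
print(evaluate_by_value(options, hypothetical_scores.get)[0])  # (8, 'Option 2: mix topics every day')

# Hypothetical votes from the analytical, creative and pragmatic experts.
print(evaluate_by_vote(options, votes=[0, 1, 0]))  # Option 1: one topic per day
```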

Step 3 (State Evaluator)

Evaluate for each sub-problem which option seems most promising. Label it “Preferred Option”.

Search Algorithm

This is the mechanism that navigates the “tree of thoughts” and determines which paths to explore further. Two commonly used search algorithms are Breadth-First Search (BFS), which keeps a set of the most promising states at each step, and Depth-First Search (DFS), which explores the most promising state first until it either reaches a final solution or judges the state impossible to complete. In the latter case, the model backtracks to a previous state and tries an alternative from there.

Step 4 (Search Algorithm / Backtracking)

If a preferred option later turns out to be incorrect, go back to the previous step and choose an alternative option.

Step 5 (Conclusion)

Come to a final solution based on your preferred options or backtracked decisions.

Question: [Fill in your question or problem here]
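Steps 1 to 5 can be pasted as one prompt, but you can also send them one turn at a time in the same conversation, so you can read (and, if needed, correct) each intermediate result before the model moves on. A minimal sketch of that loop, assuming a hypothetical `chat` function that takes the conversation as a list of `{"role": ..., "content": ...}` messages and returns the reply text; adapt it to whichever client you use.

```python
from __future__ import annotations

from typing import Callable, Dict, List

Message = Dict[str, str]
# Hypothetical chat interface: the conversation so far in, reply text out.
Chat = Callable[[List[Message]], str]

STEPS = [
    "Step 1 (Thought Decomposition): Break down the following question into up to 3 smaller "
    "sub-questions or sub-problems. Label each sub-problem as \"Thought A\", \"Thought B\" and \"Thought C\".",
    "Step 2 (Thought Generator): For each sub-problem, generate at least 2 possible solutions "
    "or approaches. Label these as \"Option 1\" and \"Option 2\".",
    "Step 3 (State Evaluator): Evaluate for each sub-problem which option seems most promising. "
    "Label it \"Preferred Option\".",
    "Step 4 (Search Algorithm / Backtracking): If a preferred option later turns out to be "
    "incorrect, go back to the previous step and choose an alternative option.",
    "Step 5 (Conclusion): Come to a final solution based on your preferred options or "
    "backtracked decisions.",
]


def run_tot_steps(chat: Chat, question: str) -> List[str]:
    """Send the five steps one turn at a time and return the reply to each step."""
    messages: List[Message] = []
    replies: List[str] = []
    for index, step in enumerate(STEPS):
        content = f"{step}\nQuestion: {question}" if index == 0 else step
        messages.append({"role": "user", "content": content})
        reply = chat(messages)
        messages.append({"role": "assistant", "content": reply})  # keep context for the next step
        replies.append(reply)
    return replies


# Stand-in chat function that echoes the start of each step (no API call is made).
def echo_chat(messages: List[Message]) -> str:
    return f"(reply to: {messages[-1]['content'].splitlines()[0][:30]}...)"


for reply in run_tot_steps(echo_chat, "How should I prepare for my statistics exam?"):
    print(reply)
```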

Breadth-First Search Approach

Question: [Fill in question here]

Task: Systematically search all possibilities.

- Generate 3 different solution directions in one round. Name these “Branch 1”, “Branch 2”, “Branch 3”.

- Evaluate which branch is the most promising.

- Then work out only that branch further with 3 new sub-options. Name these “Sub-branch A”, “Sub-branch B”, “Sub-branch C”.

- Choose the most promising sub-branch again.

- Finally, provide the final solution.
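The prompt above keeps only the single most promising branch at each level. In the ToT framework, BFS keeps the b most promising states per level, so the prompt corresponds to b = 1. The sketch below shows that loop on the Game of 24 (reach 24 from four numbers using +, -, * and /). Assumptions: thoughts are generated by enumerating arithmetic steps rather than by asking a model, and the state evaluator is a brute-force check of whether 24 is still reachable, standing in for the model's own judgement (in ToT, the model would rate each state, for example as sure/likely/impossible). All function names are hypothetical.

```python
from __future__ import annotations

from fractions import Fraction
from itertools import combinations
from typing import List, Optional, Tuple

State = Tuple[Tuple[str, ...], Tuple[Fraction, ...]]  # (equations so far, numbers left)


def next_states(state: State) -> List[State]:
    """Thought generator: combine two remaining numbers with +, -, * or /."""
    steps, numbers = state
    children: List[State] = []
    for i, j in combinations(range(len(numbers)), 2):
        a, b = numbers[i], numbers[j]
        rest = tuple(n for k, n in enumerate(numbers) if k not in (i, j))
        options = [(f"{a} + {b}", a + b), (f"{a} * {b}", a * b),
                   (f"{a} - {b}", a - b), (f"{b} - {a}", b - a)]
        if b != 0:
            options.append((f"{a} / {b}", a / b))
        if a != 0:
            options.append((f"{b} / {a}", b / a))
        for expression, value in options:
            children.append((steps + (f"{expression} = {value}",), rest + (value,)))
    return children


def still_reachable(state: State) -> bool:
    """Stand-in state evaluator: brute-force check instead of asking the model."""
    numbers = state[1]
    if len(numbers) == 1:
        return numbers[0] == 24
    return any(still_reachable(child) for child in next_states(state))


def tot_bfs(numbers: List[int], beam_width: int = 1) -> Optional[Tuple[str, ...]]:
    """Breadth-first ToT: expand all kept states, then keep the `beam_width` best."""
    frontier: List[State] = [((), tuple(Fraction(n) for n in numbers))]
    while len(frontier[0][1]) > 1:  # one level per arithmetic step
        candidates = [child for state in frontier for child in next_states(state)]
        candidates.sort(key=still_reachable, reverse=True)  # promising states first
        frontier = candidates[:beam_width]
    for steps, remaining in frontier:
        if remaining[0] == 24:
            return steps
    return None


print(tot_bfs([4, 9, 10, 13]))  # beam_width=1 mirrors the prompt above
print(tot_bfs([1, 1, 1, 1]))    # None: 24 cannot be made from four 1s
```

Replacing `next_states` with a propose prompt and `still_reachable` with a model call gives the LLM version. A wider beam costs more calls, but is less likely to discard the right branch when the evaluator makes mistakes.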

Depth-First Search Approach

Question: [Fill in question here]

Assignment: Explore the most promising direction as deeply as possible, and only go back if you notice that it does not work.

- Come up with an initial solution direction (Thought 1).

- Work on this direction until you encounter a stumbling block or inconsistent outcome.

- If a partial outcome is invalid: go back one step and choose an alternative subdirection.

- Repeat this process until you have found a complete, consistent solution.

- Report the paths taken and explain why the final solution is valid.
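For comparison, here is the depth-first strategy as a recursive sketch, again on the Game of 24 and again under our own assumptions: thoughts are enumerated arithmetic steps rather than model proposals, and the evaluator only flags two-number states that cannot make 24 as "impossible". Function names are hypothetical. Each `None` return is a backtrack: the caller moves on to its next alternative, just as the prompt asks the model to go back one step and choose an alternative subdirection.

```python
from __future__ import annotations

from fractions import Fraction
from itertools import combinations
from typing import List, Optional, Tuple

Steps = Tuple[str, ...]
Numbers = Tuple[Fraction, ...]


def next_states(steps: Steps, numbers: Numbers) -> List[Tuple[Steps, Numbers]]:
    """Thought generator: combine two remaining numbers with +, -, * or /."""
    children = []
    for i, j in combinations(range(len(numbers)), 2):
        a, b = numbers[i], numbers[j]
        rest = tuple(n for k, n in enumerate(numbers) if k not in (i, j))
        options = [(f"{a} + {b}", a + b), (f"{a} * {b}", a * b),
                   (f"{a} - {b}", a - b), (f"{b} - {a}", b - a)]
        if b != 0:
            options.append((f"{a} / {b}", a / b))
        if a != 0:
            options.append((f"{b} / {a}", b / a))
        for expression, value in options:
            children.append((steps + (f"{expression} = {value}",), rest + (value,)))
    return children


def looks_impossible(numbers: Numbers) -> bool:
    """Stand-in state evaluator: only judges two-number states, by direct check."""
    if len(numbers) != 2:
        return False
    a, b = numbers
    results = {a + b, a * b, a - b, b - a}
    if b != 0:
        results.add(a / b)
    if a != 0:
        results.add(b / a)
    return 24 not in results


def tot_dfs(steps: Steps, numbers: Numbers, dead_ends: List[Steps]) -> Optional[Steps]:
    """Depth-first ToT: follow one thought as deep as possible; a None result
    sends the caller back one step to try its next alternative."""
    if len(numbers) == 1:
        return steps if numbers[0] == 24 else None
    if looks_impossible(numbers):
        dead_ends.append(steps)  # record the abandoned path, then backtrack
        return None
    for child_steps, child_numbers in next_states(steps, numbers):
        found = tot_dfs(child_steps, child_numbers, dead_ends)
        if found is not None:
            return found
    return None


dead_ends: List[Steps] = []
solution = tot_dfs((), tuple(Fraction(n) for n in (4, 9, 10, 13)), dead_ends)
print("Paths abandoned before success:", len(dead_ends))
print("First abandoned path:", dead_ends[0])  # ('4 + 9 = 13', '10 + 13 = 23')
print("Solution:", solution)
```

The list of dead ends doubles as the "paths taken" that the prompt asks the model to report.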

General Best Practices

Be clear in your role allocation: Let the model know if it needs to take on different “experts” or “roles”. In previous prompt strategies we focused on a single role, such as a tutor or an expert, which means we often miss the perspectives of other stakeholders. By explicitly naming multiple roles or interests in the prompt, we can generate output that gives a more comprehensive and nuanced picture.

Break down the problem: Give explicit instructions to divide the problem into sub-problems (Thought Decomposition). LLMs often struggle with multi-step reasoning and tend to jump straight to a solution. By breaking the problem down into smaller, specific sub-questions, you make it easier to analyze each piece separately and ultimately arrive at an integrated solution.

State desired intermediate steps: If you want the model to generate, test and compare options (Thought Generator + State Evaluator), say so explicitly. Many prompts get stuck on one possible solution. By asking the model to generate multiple options and critically evaluate them, you can make a better-informed decision.

Formulate a checklist: After the conclusion, have the model summarize at a glance how it arrived at the solution and where the possible pitfalls lie. A checklist forces the model to check its own work and makes the process transparent, which makes it easier to spot errors or gaps.

Chain-of-Thought prompting and Tree-of-Thought prompting

Chain-of-Thought (CoT) prompting and Tree of Thoughts (ToT) prompting are both techniques that aim to improve the reasoning capabilities of large language models (LLMs). CoT prompts an LLM to explain its thought process step by step. The idea is that an LLM solves a problem better when it is instructed to make explicit the intermediate steps that lead to the final conclusion. With CoT, the LLM therefore generates a single sequence of thoughts that leads to the answer: it neither explores multiple paths of reasoning nor backtracks when it discovers an error. ToT, on the other hand, lets the LLM explore and self-evaluate multiple paths of reasoning. ToT is based on a tree structure, where each “thought” is an intermediate step in the problem-solving process. Unlike CoT, ToT allows the LLM to return to earlier steps in the reasoning process and explore other possibilities.

Conclusion

ToT is a powerful prompt engineering technique that enables LLMs to “reason”. By exploring multiple paths, evaluating their own progress, and backtracking when necessary, LLMs can solve more complex problems with higher accuracy. The modularity of the ToT framework makes it flexible and adaptable to different types of problems. Its structure is modeled on the way humans approach problems: exploring multiple possibilities and making decisions based on feedback and evaluation.

We’ve reached the end of lesson 6, having mapped out the thoughtful routes through Tree-of-Thought prompting.