Meta Prompting (MP) represents a new approach to interacting with generative AI systems such as ChatGPT. Unlike methods like few-shot prompting, where specific examples show the model how to respond, Meta Prompting focuses on shaping the reasoning framework the AI should follow. Instead of providing answers or examples, this method teaches the AI how to think by defining clear structures and syntax for task execution.

This shift from content to structure can make AI interactions more consistent, efficient, and adaptable. By emphasizing the organization of information over its specific details, Meta Prompting creates flexible templates that work across a wide range of tasks. Whether the goal is solving equations or crafting compelling text, the approach applies to both task-agnostic and task-specific settings.

What Is Meta Prompting?

Rather than instructing a large language model (LLM) with specific commands or examples (e.g., “Solve this math problem”), Meta Prompting focuses on how a problem is framed and how a solution is constructed. It introduces a structured framework that guides the model’s reasoning, enabling it to independently derive answers.

This method stands in contrast to few-shot prompting, where explicit examples in the prompt show the model what a good answer looks like. Instead, Meta Prompting defines principles and formats that the model applies universally, regardless of the task. The emphasis shifts from “What examples should I provide?” to “How can I create a structure that enables the model to solve tasks on its own?”

Example: Few-Shot Prompting vs. Meta Prompting

Few-Shot Prompting: This technique involves providing a fully worked-out solution, allowing the model to learn how the answer is constructed. Below, we have provided an example of a math problem with a fully worked-out solution. This approach helps the model understand the reasoning process through imitation.

A short note: While we have included the intermediate steps here, this is often not necessary. For instance, when classifying sentences as positive or negative, simply providing a few example sentences and their final classification would suffice.

Example 1:

Problem: Solve the quadratic equation $3x^2 + 4x - 5 = 0$.

Solution:

• Step 1: Identify the coefficients: $a = 3$, $b = 4$, and $c = -5$.

• Step 2: Calculate the discriminant: $\Delta = b^2 - 4ac = 4^2 - 4(3)(-5) = 16 + 60 = 76$.

• Step 3: Since $\Delta > 0$, the equation has two distinct real roots.

• Step 4: Calculate the roots using the quadratic formula: $x_{1,2} = \frac{-b \pm \sqrt{\Delta}}{2a} = \frac{-4 \pm \sqrt{76}}{6}$.

• Step 5: Simplify to find the roots: $x_1 = \frac{-4 + \sqrt{76}}{6}$ and $x_2 = \frac{-4 - \sqrt{76}}{6}$.

Final Answer: The roots of the equation $3x^2 + 4x - 5 = 0$ are $x_1 = \frac{-4 + \sqrt{76}}{6}$ and $x_2 = \frac{-4 - \sqrt{76}}{6}$.
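As a quick sanity check on the arithmetic in Example 1, the short Python sketch below recomputes the discriminant and substitutes both roots back into the polynomial. The names `residual`, `x1`, and `x2` are ours, chosen for illustration; they are not part of the original example.

```python
import math

# Coefficients from Example 1: 3x^2 + 4x - 5 = 0
a, b, c = 3, 4, -5

# Step 2: discriminant
delta = b**2 - 4 * a * c

# Steps 4-5: the two real roots (valid because delta > 0)
x1 = (-b + math.sqrt(delta)) / (2 * a)
x2 = (-b - math.sqrt(delta)) / (2 * a)


def residual(x: float) -> float:
    """Value of the polynomial at x; close to 0 when x is a root."""
    return a * x**2 + b * x + c


print(delta)                       # 76
print(round(x1, 4), round(x2, 4))  # 0.7863 -2.1196
print(abs(residual(x1)) < 1e-9, abs(residual(x2)) < 1e-9)
```

Substituting the roots back into the equation is a cheap way to confirm a worked example before using it as a few-shot demonstration.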

----

Example 2: ...

----

In contrast, Meta Prompting abstracts the reasoning process into a reusable framework. Instead of solving a single problem, it provides a structured template the model can apply autonomously to any similar task. For example:

{"Problem": "Solve the quadratic equation $ax^2 + bx + c = 0$ for $x$.",

"Solution": {

"Step 1": "Identify the coefficients $a$, $b$, and $c$ from the equation.",

"Step 2": "Compute the discriminant using $\\Delta = b^2 - 4ac$.",

"Step 3": "Check if $\\Delta > 0$, $\\Delta = 0$, or $\\Delta < 0$ to determine the nature of the roots.",

"Step 4": "If $\\Delta > 0$, calculate the two distinct real roots using $x_{1,2} = \\frac{-b \\pm \\sqrt{\\Delta}}{2a}$.",

"Step 5": "If $\\Delta = 0$, calculate the single real root using $x = \\frac{-b}{2a}$.",

"Step 6": "If $\\Delta < 0$, calculate the complex roots using $x_{1,2} = \\frac{-b \\pm i\\sqrt{|\\Delta|}}{2a}$.",

"Step 7": "End the solution process by summarizing the roots of the equation."

},

"Final Answer": "Depending on the discriminant $\\Delta$, the final answer will be the roots of the equation, given by $x_1, x_2$."

}

Formal Foundation

In this article, let’s also take a meta-approach by not only providing an example but also explaining the foundational principles of a meta-prompt.

Meta Prompting builds on two foundational concepts from mathematics and logic: set theory and category theory. These frameworks focus on objects (such as tasks) and the relationships or transformations between them (e.g., functions).

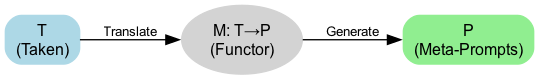

Meta Prompting specifically adopts a functorial perspective from category theory, where tasks are conceptualized as objects in one category and prompt structures are treated as corresponding objects in a separate but related category. A functor, denoted M, maps tasks and the relationships between them to their associated prompt structures. This mapping preserves key structure, allowing for consistency and coherence between the problem (task) and its generalized solution (prompt). In this way, Meta Prompting links specific tasks to broader, reusable templates and makes the relationships between tasks explicit, which supports scalable problem-solving.

Connection with Set Theory and Category Theory

- Set T (Tasks): The set of all possible tasks, such as all mathematical problems you could present to the Large Language Model.

- Set P (Prompt Structures): The set of all possible prompt structures.

- Functor M:T→P: Maps each task t ∈ T to a suitable meta-prompt p ∈ P.

Formula: for every task t∈T, we have M(t)=p, where p∈P.

The functor M generates a new prompt while preserving the underlying structure of the tasks. If there is a transformation from task t1 to t2, there will be a corresponding transformation from p1 (the meta-prompt for t1) to p2 (the meta-prompt for t2). This ensures that the relationships between tasks are mirrored in the relationships between their respective meta-prompts, maintaining consistency and coherence throughout the process.
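In standard category-theoretic notation, this structure preservation amounts to the functor laws below (composition and identity). The morphism names $f$ and $g$, the third task $t_3$, and the identity notation $\mathrm{id}$ are our own labels for illustration, not taken from the source.

```latex
% f : t_1 \to t_2 and g : t_2 \to t_3 are transformations between tasks (assumed names)
\begin{align*}
M(f) &: M(t_1) \to M(t_2), \\
M(g \circ f) &= M(g) \circ M(f), \\
M(\mathrm{id}_t) &= \mathrm{id}_{M(t)} \quad \text{for every task } t \in T.
\end{align*}
```

With $p_1 = M(t_1)$ and $p_2 = M(t_2)$, the first line is the statement that a transformation between tasks induces a transformation between their meta-prompts.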

Example of a Functor M

A critical reader may have already noticed that the example we discussed earlier primarily focused on P, the meta-prompt, while the role of M, our functor, has so far been left somewhat ambiguous. Importantly, the functor M is also a prompt, designed for a language model.

This prompt starts by specifying the task at hand. Because the goal is to map a task t to a new prompt p, the output of M is itself a prompt. For instance, M can translate the task into an input prompt that improves the model’s reasoning capabilities, as in the template further below. However, as with the earlier quadratic example, the task could also be to create a structured method for approaching a given math problem.

This process begins with the Input Prompt, which serves as the starting point, and the Objective, which defines the desired outcome of the transformation. The inclusion of Key Elements for Revision introduces a structured framework that outlines specific features to be incorporated into the revised prompt, such as multi-step reasoning, challenging conventional thinking, and fostering complex problem-solving. These elements act as requirements that our revised prompt must meet to align with the stated objective. However, these requirements are adaptable and may vary depending on the specific task at hand, allowing flexibility in how the transformation is achieved.

The Expected Outcome showcases the primary function of M: creating a revised prompt that goes beyond surface-level adjustments to deepen analytical thinking and promote a comprehensive understanding of the subject. Rather than just tweaking a specific example, M embodies a general method for restructuring prompts to stimulate critical thinking, explore multiple perspectives, and synthesize ideas from various domains.

Think of M as a “bridge-builder.” It takes the starting point (the input prompt) and the desired destination (the objective) and builds a structured path between them. This path isn’t rigid; it adapts to the nature of the task and the problem-solving goals. In this sense, M isn’t just a tool for editing; it’s a model for thinking critically and systematically about how to design prompts that encourage reasoning and creativity.

Task: Prompt Revision to improve Reasoning Capabilities.

1. Input Prompt: [input prompt]

2. Objective: Revise the above input prompt to improve the critical thinking and reasoning capabilities.

3. Key Elements for Revision:

• Integrate complex problem-solving elements.

• Embed multi-step reasoning processes.

• Incorporate scenarios challenging conventional thinking.

4. Expected Outcome:

• The revised prompt ([revised prompt]) should stimulate deeper analytical thought.

• It should facilitate a comprehensive understanding of the subject matter.

• Ensure the revised prompt fosters the exploration of diverse perspectives.

• The prompt should encourage synthesis of information from various domains.

This example illustrates the functor (M) in Meta Prompting by mapping a specific task, “Prompt Revision to Improve Reasoning Capabilities,” onto a structured approach.

- The task itself, “Prompt Revision to Improve Reasoning Capabilities,” represents an object in the category of tasks (T).

- The prompt (with numbered steps and enumerations) is the result of the functor, which maps this task to an object in the category of structured prompts (P).

Role of the Functor (M)

- Structuring: The prompt is clearly organized with a goal, key elements, and an expected outcome. This enables the LLM to approach the task systematically.

- Abstraction: The prompt remains abstract, unbound to any specific topic or problem. The structure can be applied to a variety of input prompts.

- Focus on how instead of what: The emphasis is on the method of revising the input prompt (e.g., for complex problem-solving, multi-step reasoning, etc.), rather than on the specific content of the input prompt.

The prompt serves as a “recipe” for revising prompts, and this “recipe” is the outcome of the functor translating an abstract task into a concrete, structured method. By specifying its structure, abstraction, and focus, the functor bridges the conceptual task space and the actionable prompt space.
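The “recipe” view can be sketched in a few lines of Python: M becomes a function that takes an input prompt and returns the filled-in revision template. This is a minimal illustration under our own assumptions, not an implementation from the source; `build_revision_prompt`, the two module-level constants, and the sample IRR input prompt are hypothetical names and values.

```python
from typing import Sequence

# Defaults copied from the revision template; both lists are meant to be adapted per task.
KEY_ELEMENTS = (
    "Integrate complex problem-solving elements.",
    "Embed multi-step reasoning processes.",
    "Incorporate scenarios challenging conventional thinking.",
)

EXPECTED_OUTCOME = (
    "The revised prompt ([revised prompt]) should stimulate deeper analytical thought.",
    "It should facilitate a comprehensive understanding of the subject matter.",
    "Ensure the revised prompt fosters the exploration of diverse perspectives.",
    "The prompt should encourage synthesis of information from various domains.",
)


def build_revision_prompt(
    input_prompt: str,
    key_elements: Sequence[str] = KEY_ELEMENTS,
    expected_outcome: Sequence[str] = EXPECTED_OUTCOME,
) -> str:
    """Hypothetical M: fill the revision template with a concrete input prompt."""
    lines = [
        "Task: Prompt Revision to improve Reasoning Capabilities.",
        f"1. Input Prompt: {input_prompt}",
        "2. Objective: Revise the above input prompt to improve the critical "
        "thinking and reasoning capabilities.",
        "3. Key Elements for Revision:",
        *[f"   - {item}" for item in key_elements],
        "4. Expected Outcome:",
        *[f"   - {item}" for item in expected_outcome],
    ]
    return "\n".join(lines)


# Hypothetical input prompt, for illustration only.
print(build_revision_prompt("Explain what the Internal Rate of Return (IRR) measures."))
```

Keeping the key elements and expected outcomes as parameters reflects the point made earlier that these requirements are adaptable: the structure stays fixed while the content varies by task.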

Here, we used our functor to develop an example case for a first-year bachelor finance course focused on Internal Rate of Return (IRR). While the prompt could still be refined further, our goal is to highlight its potential and capabilities. It is important to steer the prompt in the right direction while it is being created and to make the directions as clear and detailed as possible. You must account for all the constraints and expectations; otherwise, the language model may not fully understand your intentions. Even so, with just a few minutes of effort, we were able to make significant progress. This demonstrates how even a relatively simple implementation of meta-prompting can produce a solid foundation for educational use cases, provided it is given adequate thought and structure.

From Meta to Meta-Meta Prompting

To illustrate this structure, consider a meta-prompt for solving mathematical problems. Here’s how it could look:

- Object (Task): Let T1 = “Solve a quadratic equation.”

- Meta Prompt M(T1): This is not a direct solution but a structured set of instructions for the task:

  - “Let’s think step by step.”

  - “Identify the coefficients a, b, and c.”

  - “Calculate the discriminant $\Delta = b^2 - 4ac$, and determine how many solutions exist.”

  - “Provide the final answers in LaTeX notation.”

This represents a transformation of any task T into a prompt P, equipping the model with a methodical approach to solving problems without relying on explicit examples. The LLM can autonomously follow the outlined thought process.
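To make that thought process concrete, the sketch below mirrors the instructions step by step in Python, including the three discriminant cases from the earlier quadratic template. It only illustrates what the model is being asked to do and is not code from the source; `solve_quadratic` and its return format are our assumptions.

```python
import math


def solve_quadratic(a: float, b: float, c: float):
    """Follow the meta-prompt steps: coefficients -> discriminant -> roots.

    Returns (nature, roots), where nature names the discriminant case.
    """
    if a == 0:
        raise ValueError("Not a quadratic equation: a must be non-zero.")

    # Calculate the discriminant and determine how many solutions exist.
    # (Exact comparison with 0 is fine for a sketch; real inputs may need a tolerance.)
    delta = b * b - 4 * a * c

    if delta > 0:
        root = math.sqrt(delta)
        return "two distinct real roots", ((-b + root) / (2 * a), (-b - root) / (2 * a))
    if delta == 0:
        return "one repeated real root", (-b / (2 * a),)

    # delta < 0: complex-conjugate pair, using i * sqrt(|delta|)
    root = 1j * math.sqrt(abs(delta))
    return "two complex roots", ((-b + root) / (2 * a), (-b - root) / (2 * a))


print(solve_quadratic(1, -3, 2))  # ('two distinct real roots', (2.0, 1.0))
print(solve_quadratic(1, 2, 1))   # ('one repeated real root', (-1.0,))
print(solve_quadratic(1, 0, 1))   # complex case: roots are i and -i
```

The branching on the sign of the discriminant is the part a few-shot example with a single worked problem would not cover, which is why the meta-prompt spells out all cases.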

Introducing Meta-Meta Prompting

Beyond mapping a single task, Meta-Meta Prompting layers additional abstraction. It builds a second layer of meta-structure to address higher-level objectives.

Example: A Teaching-Oriented Meta-Meta Prompt

Let’s assume the goal is not only to solve a quadratic equation but also to generate a teaching guide to help students understand the solution process. A meta-meta prompt involves additional layers of instructions that guide the model in both solving the problem and presenting the solution in a pedagogical manner.

1. Define the Base Problem and Solution

Using the template defined earlier, here is the initial breakdown:

{"Problem": "Solve the quadratic equation $ax^2 + bx + c = 0$ for $x$.",

"Solution": {

"Step 1": "Identify the coefficients $a$, $b$, and $c$ from the equation.",

"Step 2": "Compute the discriminant using $\\Delta = b^2 - 4ac$.",

"Step 3": "Check if $\\Delta > 0$, $\\Delta = 0$, or $\\Delta < 0$ to determine the nature of the roots.",

"Step 4": "If $\\Delta > 0$, calculate the two distinct real roots using $x_{1,2} = \\frac{-b \\pm \\sqrt{\\Delta}}{2a}$.",

"Step 5": "If $\\Delta = 0$, calculate the single real root using $x = \\frac{-b}{2a}$.",

"Step 6": "If $\\Delta < 0$, calculate the complex roots using $x_{1,2} = \\frac{-b \\pm i\\sqrt{|\\Delta|}}{2a}$.",

"Step 7": "End the solution process by summarizing the roots of the equation."

},

"Final Answer": "Depending on the discriminant $\\Delta$, the final answer will be the roots of the equation, given by $x_1, x_2$."

}

2. Generate the Teaching-Oriented Meta-Prompt

Next, we create a meta-meta prompt that guides the model in teaching students how to solve a quadratic equation:

{"Problem": "Explain how to guide students through solving the equation.",

"Solution": {

"Step 1": "Introduce the basic concepts of quadratic equations.",

"Step 2": "Help students identify the coefficients.",

"Step 3": "Show how to calculate the discriminant and explain what it tells about the solutions.",

"Step 4": "Explain the quadratic formula and demonstrate how to calculate the roots."

},

"Final Answer": "Summarize the steps and the solutions obtained."

}

Outcome of the Meta-Meta Prompt

By using this meta-meta prompt, the model not only solves the quadratic equation but also provides a clear, structured teaching guide for explaining the solution process to students. The approach is multifaceted, ensuring that the model focuses on:

- Breaking down the steps for clarity: Making each step understandable for students at different learning levels.

- Addressing common misconceptions: Helping students anticipate potential mistakes and reinforcing important concepts.

- Offering teaching strategies: Encouraging interactivity and critical thinking through guided questions and explanations.

This layering of abstraction transforms the task of solving a quadratic equation into a learning experience.
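One way to hand both layers to a chat model is to serialize the two templates and join them into a single system prompt. The sketch below is an assumption-laden illustration: the templates are abbreviated, the connecting sentences and the sample question are ours, and the `role`/`content` message layout is a commonly used chat format rather than anything the source specifies.

```python
import json

# Abbreviated versions of the two templates above (steps shortened for space).
base_template = {
    "Problem": "Solve the quadratic equation ax^2 + bx + c = 0 for x.",
    "Solution": {
        "Step 1": "Identify the coefficients a, b, and c from the equation.",
        "Step 2": "Compute the discriminant.",
    },
    "Final Answer": "Summarize the roots of the equation.",
}

teaching_template = {
    "Problem": "Explain how to guide students through solving the equation.",
    "Solution": {
        "Step 1": "Introduce the basic concepts of quadratic equations.",
        "Step 2": "Help students identify the coefficients.",
    },
    "Final Answer": "Summarize the steps and the solutions obtained.",
}


def build_messages(user_question: str) -> list:
    """Combine both layers into one system prompt plus the user's question.

    The role/content layout is an assumed chat format; adapt it to the
    client library you actually use.
    """
    system_prompt = "\n\n".join([
        "Follow this structure when solving the problem:",
        json.dumps(base_template, indent=2, ensure_ascii=False),
        "Then present the solution as a teaching guide using this structure:",
        json.dumps(teaching_template, indent=2, ensure_ascii=False),
    ])
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_question},
    ]


# Hypothetical student-facing question, for illustration only.
messages = build_messages("Solve x^2 - 5x + 6 = 0 and explain it to a first-year student.")
print(messages[0]["content"])
```

Keeping the base layer and the teaching layer as separate objects makes it easy to swap the pedagogical layer for another higher-level objective without touching the problem-solving template.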

Setting the above prompts as system prompts results in the following interaction.

Conclusion

Meta Prompting offers a scalable, generic, and efficient alternative to traditional prompting techniques. By focusing on structure rather than content, it reduces the model's reliance on specific examples and makes prompt engineering skill even more valuable: we are teaching LLMs not only what to answer but also how to arrive at an answer. This can broaden the range of applications, improve performance, and save resources.

For universities and research institutions, this could be a technique to deploy models more effectively for complex tasks such as mathematics, text analysis, and education. By teaching LLMs not just what to do but also how to think, you make them more effective and adaptable to a broader array of challenges.

Resources

Zhang, Y. (2023). Meta Prompting for AGI Systems.