Using AI for web scraping and a free practice website sounds like music to your ears? In this article, we will explain how LLMs can collect data online. Traditionally, this task often required a lot of manual work (copy, paste), or programming languages like Python and R with various libraries to parse and extract data from websites. However, with the introduction and the development of AI, a new player has entered the market: Large Language Models (LLMs) can web scrape using human language. Sounds Exciting? Then let’s quickly start with a simple way you can use AI for web scraping, based on a recently published article in the Journal of Retailing.

For those who stick to traditional web scraping methods using Python and R, or for anyone looking to practice AI web scraping without copyright concerns, our colleagues have created a special platform for learning and practice. The website, music-to-scrape.org, provides a controlled environment where you can apply the knowledge gained from this article!

What Can You Do with AI Web Scraping?

Generative AI and Large Language Models (LLMs) offer numerous possibilities for web scraping by improving coding, data discovery, enrichment, and analysis support.

- Coding and scalability: LLMs, such as GPT models, can assist in working with HTML code, identifying relevant tags, and scheduling web scraping tasks. They can also generate initial code in various programming languages to extract specific elements of a website.

- Data discovery and enrichment: LLMs broaden the scope of web scraping by identifying diverse and relevant datasets and websites

- Example from the paper: LLMs can automate complex tasks, such as identifying retailer names in newspaper articles to build a retailer database. They can link data from different sources, match unique product IDs..

- Analysis support: LLMs help with the analysis phase by restructuring data, performing exploratory analysis, and visualizing results. They can also execute sentiment analysis and assist with other analytical tasks.

In addition, LLMs are valuable for data matching, whether aligning existing datasets or finding supplementary information by navigating the web. However, it is very improtant to verify the accuracy of these matches.

The following table provide some example propmts, illustarting how to use AI for web scaping practically.

| Area | Goal | Example Prompts |

|---|---|---|

| Coding | Identify elements in a web page | “Identify the html_tags that allow me to locate the price of products in the following web page.” |

| Suggest methods to extract elements | “Can you write code in R using Rvest that scrapes prices from the following website?” | |

| Develop code in different languages | “Below, I have some R code which scrapes prices of a website. Could you translate the code into Python so it does the exact same thing?” | |

| Debug and fix code | “My Python scraper is failing to parse dates correctly from a webpage. Can you suggest a fix?” | |

| Suggest code improvements | “Look at my code below which tries to scrape the website. Could you give me some suggestions that could improve this script?” | |

| Get a script to start a data collection | “I’d like to regularly monitor product names and prices at [insert website]. Which coding language would you recommend me to scrape the information with and could you provide code I could use as a starting point?” | |

| Data Discovery & Enrichment | Extract data from a single web page | “I need the product names and corresponding prices of this webshop. Please provide them in an Excel sheet.” |

| Identify similar data sources | “I have data on prices of sodas at Walmart in the US, can you provide me with other [relevant retailers/countries] I should inspect?” | |

| Identify additional data sources | “I have data on sodas including the EAN, can you provide me with datasets with nutritional information on EAN codes?” | |

| Analysis Support | Restructuring data to get “clean” output | “Given the following HTML, how would I extract the product name and price using Python, R, and Puppeteer?” |

| Check data for anomalies | “I have a scraper that collects data on prices from X, can you write an R function for me that verifies that all prices are in USD?” | |

| Recommending and creating data visualizations | “I have data scraped from a website [insert which data you have]. It contains [insert what your data is about], please suggest 5 ways to visualize this data.” | |

| Performing sentiment analysis | “I have [describe dataset] with reviews about products from Amazon. Could you help me perform a sentiment analysis?” |

Practical Tips AI Web Scraping

Some prompts may not function effectively with language models that lack internet access, such as ChatGPT-01. For instance, pasting a webpage URL might be ineffective, even with models that have internet-enabled capabilities. A more reliable approach is to upload a website’s HTML file, which can be obtained by saving a webpage (e.g., the homepage of Amazon.com).

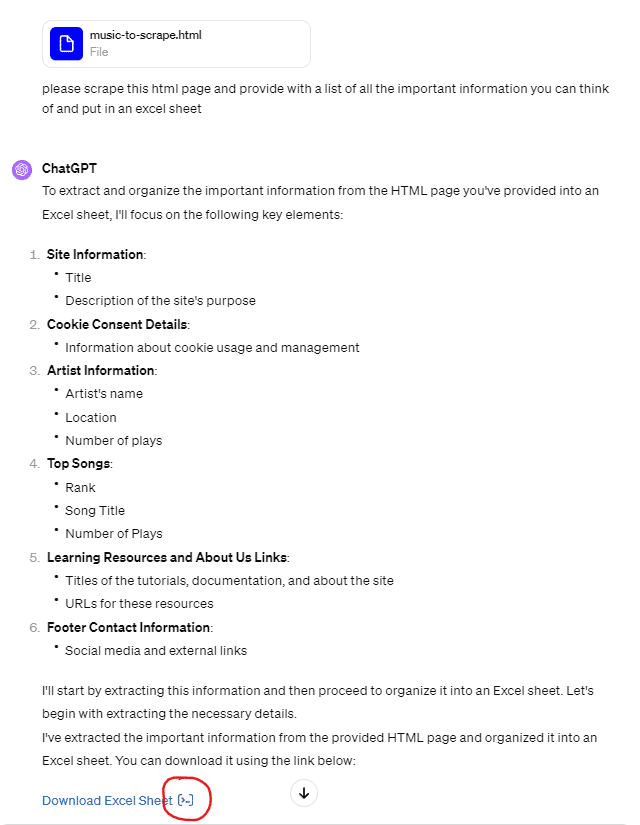

To demonstrate this in action, refer to the screenshots below. They illustrate how we applied this technique in ChatGPT using a this page from music-to-scrape.org and the data we were able to quickly extract from it.