Ever wondered how AI can streamline web scraping and make collecting data from the internet more efficient? Web scraping has become an essential method for gathering data online and supports a wide range of uses. Traditionally, it has required a lot of manual work or deep programming knowledge, typically in languages like Python and R, using various libraries to parse and extract data from websites. However, as AI continues to evolve, a new player has entered the arena: Large Language Models (LLMs). In this article, we briefly discuss some simple ways you can use AI for web scraping, based on a recently published article in the Journal of Retailing.

Web Scraping Tools Overview

Before discussing AI’s role in web scraping, let’s first outline the tools currently available for data extraction. Web scraping can be done with a range of tools, from code-based solutions to more user-friendly commercial platforms and, more recently, AI-driven approaches. Here, we briefly explore each type, explaining its characteristics and applications.

Code-Based Tools

Code-based tools rely on programming languages like R and Python, with libraries such as rvest and RSelenium (R) or BeautifulSoup, Scrapy, and Selenium (Python), to build customizable web scrapers. These tools offer deep control to those with coding knowledge, which makes them suitable for detailed and long-term data projects. For tutorials on web scraping with these tools, Tilburg Science Hub offers clear and practical resources.
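To give a flavor of the code-based approach, here is a minimal sketch in Python. It uses the standard-library `html.parser` module instead of BeautifulSoup so that it runs without extra installs. The sample HTML and the `price` class name are hypothetical; a real page will use its own markup, which you would find by inspecting it in your browser.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collect the text of every element whose class list contains 'price'.

    The 'price' class name is a hypothetical example. This simple version
    assumes the price text is not nested inside further tags.
    """

    def __init__(self):
        super().__init__()
        self.prices = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        # Any closing tag ends the current price element.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


def scrape_prices(html):
    """Return the price strings found in an HTML document."""
    parser = PriceParser()
    parser.feed(html)
    parser.close()
    return parser.prices


SAMPLE_HTML = """
<ul>
  <li><span class="name">Cola</span> <span class="price">$1.99</span></li>
  <li><span class="name">Lemonade</span> <span class="price">$2.49</span></li>
</ul>
"""

if __name__ == "__main__":
    print(scrape_prices(SAMPLE_HTML))  # ['$1.99', '$2.49']
```

Libraries like BeautifulSoup or rvest make the same lookup shorter with CSS selectors, but the underlying idea is identical: locate the elements that hold the data and read their text.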

Non-Code-Based Commercial Tools

Platforms like Octoparse, Import.io, ParseHub, and WebHarvy provide a user-friendly, GUI-driven approach that is ideal for quick setups without coding. Although they usually cost more, they are well suited to hassle-free, scalable data collection when little customization is needed.

LLM-Based Scrapers

Emerging LLM-based tools like ChatGPT are redefining traditional web scraping by letting you give data extraction instructions in natural language. This makes them well suited to prototyping web scraping scripts or to one-time data collections. Although they are currently less capable of looping over many pages or running on a schedule, they represent an accessible future for web scraping tasks.

Practicing Your Web Scraping Skills

For those eager to learn and practice traditional, code-based web scraping with Python and R, there is a dedicated practice platform. The website music-to-scrape.org was developed by Tilburg Science Hub specifically as a playground for exercising and improving your web scraping skills. It offers a realistic yet controlled environment in which you can apply and test your knowledge of extracting data from the web.

Using Generative AI for Web Scraping

The introduction of Generative AI and LLMs has opened up new possibilities for web scraping. AI can now support the process across coding, scalability, data discovery, enrichment, and even analysis, offering a new perspective on traditional web scraping methods.

Coding and Scalability

The tricky part of web scraping is moving from a simple setup to one that runs reliably over time. LLMs, such as GPT models, offer valuable support here: they help you navigate complex HTML, identify the tags that hold the data you need, and manage scraping schedules. This support is key for building more advanced scraping setups, and it helps you write code in different languages that targets specific website elements faster and more accurately.
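When an LLM suggests which tags hold the data you need, it is worth checking that suggestion against the page itself. The sketch below is our own illustration, not output from an LLM: it scans HTML with the standard-library parser and counts which (tag, class) pairs contain price-like text. The regular expression is a simplifying assumption that only recognizes dollar amounts such as `$1.99`, and the sample HTML is made up.

```python
import re
from collections import Counter
from html.parser import HTMLParser

# Assumption: prices are dollar amounts such as "$12" or "$12.34".
PRICE_RE = re.compile(r"\$\d+(?:\.\d{2})?")

# Void elements never get a closing tag, so they are not pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class TagLocator(HTMLParser):
    """Count (tag, class) pairs whose own text contains a price-like string."""

    def __init__(self):
        super().__init__()
        self.stack = []       # currently open (tag, class) pairs
        self.hits = Counter()

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append((tag, dict(attrs).get("class") or ""))

    def handle_endtag(self, tag):
        # Pop back to the most recent matching open tag; tolerates sloppy HTML.
        for i in range(len(self.stack) - 1, -1, -1):
            if self.stack[i][0] == tag:
                del self.stack[i:]
                break

    def handle_data(self, data):
        # Credit the innermost open element with any price-like text.
        if self.stack and PRICE_RE.search(data):
            self.hits[self.stack[-1]] += 1


def locate_price_tags(html):
    """Return ((tag, class), count) pairs, most frequent first."""
    locator = TagLocator()
    locator.feed(html)
    locator.close()
    return locator.hits.most_common()


SAMPLE_HTML = (
    '<div class="card"><h2 class="title">Cola</h2>'
    '<span class="price">$1.99</span></div>'
    '<div class="card"><h2 class="title">Tea</h2>'
    '<span class="price">$2.50</span></div>'
    '<p class="promo">Save $5 today</p>'
)

if __name__ == "__main__":
    for (tag, cls), count in locate_price_tags(SAMPLE_HTML):
        print(f"<{tag} class='{cls}'>: {count}")
```

The most frequent pair (here `span` with class `price`) is usually the one to target, while one-off matches such as a promotional banner show why a quick check like this helps before you scale up a scraper.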

Data Discovery and Enrichment

LLMs widen the scope of web scraping. They help identify a broader range of datasets and websites, encouraging exploration beyond common sources. This is especially useful for data from regions less known to the researcher. LLMs streamline the process of executing detailed queries, such as pinpointing specific retailer names in numerous articles for database compilation, and linking varied data sources, making data collection more comprehensive.
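Once an LLM has pointed you to an additional data source, linking it to your scraped data often comes down to a join on a shared identifier. As a minimal sketch, assuming both datasets carry an EAN field (the records, field names, and EANs below are made-up placeholders), the merge can be done with plain Python dictionaries:

```python
def link_by_ean(prices, nutrition):
    """Left-join scraped price records with nutrition records on the 'ean' field.

    Both inputs are lists of dicts. Every price record is kept; a 'matched'
    flag shows whether a nutrition record with the same EAN was found.
    """
    nutrition_by_ean = {row["ean"]: row for row in nutrition}
    linked = []
    for row in prices:
        match = nutrition_by_ean.get(row["ean"])
        # Copy the nutrition fields (except the key itself) into the record.
        extra = {k: v for k, v in match.items() if k != "ean"} if match is not None else {}
        linked.append({**row, **extra, "matched": match is not None})
    return linked


# Made-up example records; the EANs and values are placeholders, not real data.
scraped_prices = [
    {"ean": "1111111111111", "name": "Soda A", "price_usd": 1.99},
    {"ean": "2222222222222", "name": "Soda B", "price_usd": 1.49},
]
nutrition_data = [{"ean": "1111111111111", "sugar_g_per_100ml": 10.0}]

if __name__ == "__main__":
    for record in link_by_ean(scraped_prices, nutrition_data):
        print(record)
```

Keeping unmatched records with a `matched` flag, rather than dropping them, makes it easy to see how much of your scraped data the new source actually covers.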

Analysis and Visualization Support

LLMs can also help with restructuring data and running preliminary analyses, although their integration into the research process still has room to grow. By simplifying the analysis phase and suggesting ways to visualize data, they can improve both the efficiency and the depth of research findings.
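As an example of the kind of restructuring and sanity check an LLM might help you write, the sketch below turns raw price strings into numbers and flags any that do not look like US dollar amounts. It is a hypothetical helper written in Python, and it assumes that USD prices are formatted with a leading `$` sign (optionally with thousands separators); anything else is set aside for manual review.

```python
import re

# Assumption: USD prices look like "$1.99" or "$1,299.00"; other formats are flagged.
USD_RE = re.compile(r"^\$(\d{1,3}(?:,\d{3})*|\d+)(\.\d{1,2})?$")


def clean_prices(raw_prices):
    """Split raw price strings into parsed USD floats and anomalies."""
    parsed, anomalies = [], []
    for raw in raw_prices:
        text = raw.strip()
        if USD_RE.match(text):
            # Drop the "$" and thousands separators before converting.
            parsed.append(float(text[1:].replace(",", "")))
        else:
            anomalies.append(raw)
    return parsed, anomalies


if __name__ == "__main__":
    values, flagged = clean_prices(["$1.99", " $1,299.00 ", "€2.50", "1.99 USD"])
    print(values)   # [1.99, 1299.0]
    print(flagged)  # ['€2.50', '1.99 USD']
```

Returning the anomalies instead of silently discarding them keeps the check transparent: a sudden rise in flagged values often means the website changed its layout or currency.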

By supporting web scraping from coding through to analysis, Generative AI and LLMs not only make data collection easier but can also improve the quality of data insights. The following table of example prompts illustrates the practical use of AI in web scraping.

| Area | Goal | Example Prompts |
|---|---|---|
| Coding | Identify elements in a web page | “Identify the html_tags that allow me to locate the price of products in the following web page.” |
| | Suggest methods to extract elements | “Can you write code in R using Rvest that scrapes prices from the following website?” |
| | Develop code in different languages | “Below, I have some R code which scrapes prices of a website. Could you translate the code into Python so it does the exact same thing?” |
| | Debug and fix code | “My Python scraper is failing to parse dates correctly from a webpage. Can you suggest a fix?” |
| | Suggest code improvements | “Look at my code below which tries to scrape the website. Could you give me some suggestions that could improve this script?” |
| | Get a script to start a data collection | “I’d like to regularly monitor product names and prices at [insert website]. Which coding language would you recommend me to scrape the information with and could you provide code I could use as a starting point?” |
| Data Discovery & Enrichment | Extract data from a single web page | “I need the product names and corresponding prices of this webshop. Please provide them in an Excel sheet.” |
| | Identify similar data sources | “I have data on prices of sodas at Walmart in the US, can you provide me with other [relevant retailers/countries] I should inspect?” |
| | Identify additional data sources | “I have data on sodas including the EAN, can you provide me with datasets with nutritional information on EAN codes?” |
| Analysis Support | Restructuring data to get “clean” output | “Given the following HTML, how would I extract the product name and price using Python, R, and Puppeteer?” |
| | Check data for anomalies | “I have a scraper that collects data on prices from X, can you write an R function for me that verifies that all prices are in USD?” |
| | Recommending and creating data visualizations | “I have data scraped from a website [insert which data you have]. It contains [insert what your data is about], please suggest 5 ways to visualize this data.” |
| | Performing sentiment analysis | “I have [describe dataset] with reviews about products from Amazon. Could you help me perform a sentiment analysis?” |

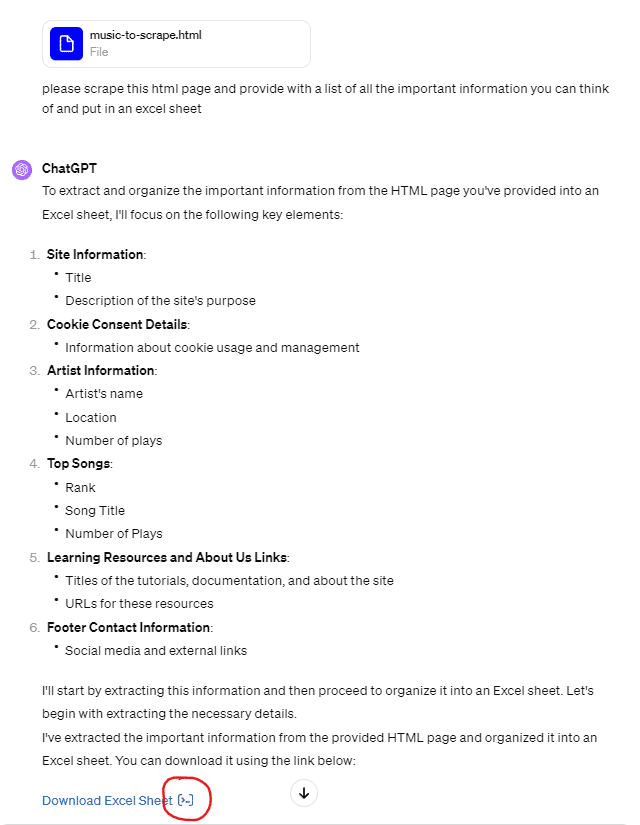

Some prompts may not work with language models that lack internet access, such as the GPT-3.5 version of ChatGPT. Even with internet-enabled models, providing only a webpage URL can be ineffective. A better approach is to upload the website’s HTML file, which you can obtain by saving the webpage in your browser (e.g., Amazon.com’s homepage). To see this in action, check out the screenshots below. They show how we used this technique in ChatGPT on a page from music-to-scrape.org, and the data we quickly obtained from it:

To view the complete Python code that ChatGPT wrote to retrieve the scraped data, click the button highlighted in the screenshot on the left. This opens a window showing the code (see the screenshot below).
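If you would rather run the extraction yourself, the saved-HTML approach also works locally. The sketch below is not the code ChatGPT generated; it is our own minimal illustration. It reads a saved page from disk, pairs up product names and prices, and writes them to a CSV file that Excel can open. The file names and the `name`/`price` class names are hypothetical and must be adapted to the page you saved.

```python
import csv
from html.parser import HTMLParser
from pathlib import Path


class ProductParser(HTMLParser):
    """Pair the text of elements with class 'name' and class 'price'.

    Assumes (hypothetically) that each product's name appears before its price.
    """

    def __init__(self):
        super().__init__()
        self.current_field = None
        self.rows = []
        self._name = None

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "name" in classes:
            self.current_field = "name"
        elif "price" in classes:
            self.current_field = "price"

    def handle_endtag(self, tag):
        self.current_field = None

    def handle_data(self, data):
        text = data.strip()
        if not text or self.current_field is None:
            return
        if self.current_field == "name":
            self._name = text
        elif self._name is not None:
            # A price completes the row for the most recently seen name.
            self.rows.append((self._name, text))
            self._name = None


def html_file_to_csv(html_path, csv_path):
    """Extract (name, price) rows from a saved HTML file into a CSV file."""
    parser = ProductParser()
    parser.feed(Path(html_path).read_text(encoding="utf-8"))
    parser.close()
    with open(csv_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["product_name", "price"])
        writer.writerows(parser.rows)
    return len(parser.rows)


if __name__ == "__main__":
    # Hypothetical file names: save the page from your browser first.
    count = html_file_to_csv("saved_page.html", "products.csv")
    print(f"Wrote {count} products")
```

Working from a saved file also means you can rerun and debug the parser without sending repeated requests to the website.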

Conclusion

AI has introduced a wealth of opportunities for web scraping, a trend that is expected to grow as AI technologies advance. For those interested in the practical applications of web scraping in more detail, the Journal of Retailing article offers a thorough analysis of web scraping techniques.