OpenAI has launched a new model called GPT-4o. As you know, we at Tilburg.ai are always enthusiastic about new toys from OpenAI. The “o” in GPT-4o stands for “omni,” meaning “all” or “every.” This implies that the model should be capable of everything. While it’s not quite there yet, this model comes a step closer.

Previously, interaction with ChatGPT was text-based. Now, GPT-4o allows reasoning across audio, vision, and text in real-time. OpenAI has showcased the capabilities of its new model in various promotional videos but has decided to roll out the features gradually.

At Tilburg.ai we have already compiled an extensive article on all the new features of GPT-4o and what you can do with it. You can read that article HERE.

This article dives deeper into the GPT-4o API, which now handles image processing as well as text, and provides templates and examples that show how it can support your studies.

GPT-4o: Available for All Users

The new model is especially good news for those without a Plus subscription. OpenAI has made it available to everyone, although the number of prompts you can send is limited, and that limit varies with how many people are using the service. So now everyone can use a model that matches GPT-4 Turbo performance on English text and code. When GPT-4o is unavailable, users are switched back to GPT-3.5.

Plus users can send up to 80 messages every 3 hours on GPT-4o and up to 40 messages every 3 hours on GPT-4. These limits might be lower during busy times to ensure everyone can use it. Note also that GPT-4o’s knowledge is up to date as of October 2023.

Exciting Upgrades for API Users

API users can look forward to a significant upgrade. GPT-4o delivers the same level of intelligence as GPT-4 Turbo but is faster and more cost-effective. What more could you ask for?

Well, it offers one additional feature: uploading images to the API. GPT-4o can understand and explain these images. Soon, the GPT-4o API will also be able to comprehend audio, but for now, images are the only non-text input it can interpret. Don’t worry, we’ll explain everything as clearly as possible so that everyone is ready to dive in and use the API model.

List of API upgrades:

- Access: GPT-4o supports both text and image processing.

- Pricing: the GPT-4o API is 50% cheaper than the GPT-4 Turbo API, costing $5 per million input tokens and $15 per million output tokens.

- Speed: GPT-4o is twice as fast as GPT-4 Turbo.

- Rate Limits: GPT-4o’s rate limits are five times higher than GPT-4 Turbo’s, up to 10 million tokens per minute.

- Vision: GPT-4o performs better than GPT-4 Turbo for image-related tasks.

- Multilingual Support: GPT-4o has improved support for non-English languages compared to GPT-4 Turbo.
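
To get a feel for what the pricing in the list above means in practice, here is a minimal sketch that turns token counts into dollars. The helper name `estimate_cost` is our own invention for illustration, and the prices are simply the ones quoted above, so adjust them if OpenAI changes its rates.

```python
# Prices quoted in the list above, in US dollars per one million tokens.
INPUT_PRICE_PER_M = 5.00
OUTPUT_PRICE_PER_M = 15.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of one request (hypothetical helper)."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_M
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


# Example: a 1,000-token prompt that produces a 500-token answer.
cost = estimate_cost(1_000, 500)
print(f"Estimated cost: ${cost:.4f}")  # prints "Estimated cost: $0.0125"
```

After a real request, the token counts can be read from the response object (`usage.prompt_tokens` and `usage.completion_tokens`) and passed into the same function.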

For a beginner’s tutorial on the OpenAI API, be sure to check out our earlier article. It’s packed with helpful tips and step-by-step explanations, and it shows you how to obtain your OpenAI API key to get you started!

How to Use the GPT-4o API Model

For this article, we will use Python code. To specify GPT-4o in your Python code, follow these steps:

1. Install the OpenAI Python Package

First, you need to install the OpenAI Python package. Open your terminal and run:

pip install openai

2. Set Up Your Environment

Next, set up your environment by importing the necessary libraries and setting your API key. This is crucial for authenticating your requests to OpenAI. The only thing you need to adjust here is the key itself: either set the OPENAI_API_KEY environment variable or replace "your_api_key_here" with your own unique API key.

from openai import OpenAI
import os

# Set the API key and model name
MODEL = "gpt-4o"
client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY", "your_api_key_here"))

3. Create a Completion Request to Interact with the Model

Now, create a completion request to interact with the model. This involves specifying the model, providing context with a system message, and writing the user prompt.

completion = client.chat.completions.create(
    model=MODEL,
    messages=[
        # The system message sets the role, tone, and output format
        {"role": "system", "content": "You are an economics expert. You provide detailed and clear explanations on economic concepts. Assist the user in understanding specific economic scenarios. Please use a professional and educational tone. The output should be a detailed and clear explanation in Markdown format."},
        # The user message contains the actual question
        {"role": "user", "content": "Hello! Could you explain how a shift in the demand curve affects equilibrium price and quantity?"},
    ],
)

print("Assistant: " + completion.choices[0].message.content)

To get good output from ChatGPT, it is important to write a good prompt. Remember: garbage in, garbage out. Fortunately, you can use our format for your prompts!

Assistant: Sure, I can help with that! It’s essential to grasp both the concepts of the demand curve and the equilibrium point to understand this properly.

### Understanding the Demand Curve

The **demand curve** represents the relationship between the quantity of a good or service that consumers are willing to purchase and the good's price, holding other factors constant. It typically slopes downward from left to right, indicating that as the price decreases, the quantity demanded increases, and vice versa.

### Equilibrium Price and Quantity

....
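
If you plan to ask many questions with the same setup, the system/user pair from the request above can be wrapped in a small helper. The sketch below is our own illustration: `build_messages` is a hypothetical name, not something the OpenAI package provides.

```python
def build_messages(system_prompt: str, user_prompt: str) -> list[dict]:
    """Return a messages list with one system and one user message (hypothetical helper)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages(
    "You are an economics expert. Respond in Markdown.",
    "Could you explain what a price ceiling is?",
)
print(messages[1]["content"])
```

The returned list can be passed as the `messages` argument of `client.chat.completions.create`, so only the prompts change between questions.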

Image Processing with the GPT-4o API

A critical reader will notice that the text example above doesn’t show any new features yet, although it is faster and cheaper. The real value that GPT-4o adds lies in its understanding of images. Images are made available to the model in two main ways: by passing a link to the image or by passing the Base64 encoded image directly in the request. Images can be passed in the user, system, and assistant messages.

Base64 encoding converts binary data (like images or documents) into text. This makes it easier to share and store files, especially in text-based systems like emails or web pages. Typical binary files that get encoded this way include .jpg and .png images, .pdf documents, and .mp4 videos.
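
To make this concrete, here is a small sketch using Python's built-in `base64` module. It encodes the eight bytes that every PNG file starts with and then decodes them again; the variable names are ours and chosen only for illustration.

```python
import base64

# The fixed 8-byte signature at the start of every PNG file (binary data).
raw = b"\x89PNG\r\n\x1a\n"

# Encode the bytes to Base64 and turn the result into an ordinary text string.
encoded = base64.b64encode(raw).decode("utf-8")
print(encoded)  # prints "iVBORw0KGgo="

# Decoding gives back exactly the original bytes.
decoded = base64.b64decode(encoded)
print(decoded == raw)  # prints "True"
```

Note that the encoded text is longer than the original data (12 characters for 8 bytes here), so large images lead to noticeably larger requests.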

Base64 Encoded Images

If you have an image or set of images locally, you can pass those to the model in Base64 encoded format. No worries, below you will find a code template that converts your image file to Base64. The only things you need to do are:

- Specify the file path to your image.

- Set your API key.

- Create a prompt for the model.

Everything else can be left as is!

import base64
import os

from openai import OpenAI

# Specify the path to your image file here
IMAGE_PATH = "/path/to/your/image.png"

# Set the API key and model name
MODEL = "gpt-4o"
client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY", "your_api_key_here"))


# Function to encode the image file as a Base64 string
def encode_image(image_path):
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode("utf-8")


# Encode the image specified in IMAGE_PATH
base64_image = encode_image(IMAGE_PATH)

# Create a completion request to interact with the model
response = client.chat.completions.create(
    model=MODEL,
    messages=[
        {"role": "system", "content": "You are a helpful assistant that responds in Markdown."},
        {"role": "user", "content": [
            # The text part holds the prompt, the image part holds the encoded file
            {"type": "text", "text": "Could you explain this model?"},
            {"type": "image_url", "image_url": {
                "url": f"data:image/png;base64,{base64_image}"}
            }
        ]}
    ],
)

# Print the assistant's response
print("Assistant: " + response.choices[0].message.content)

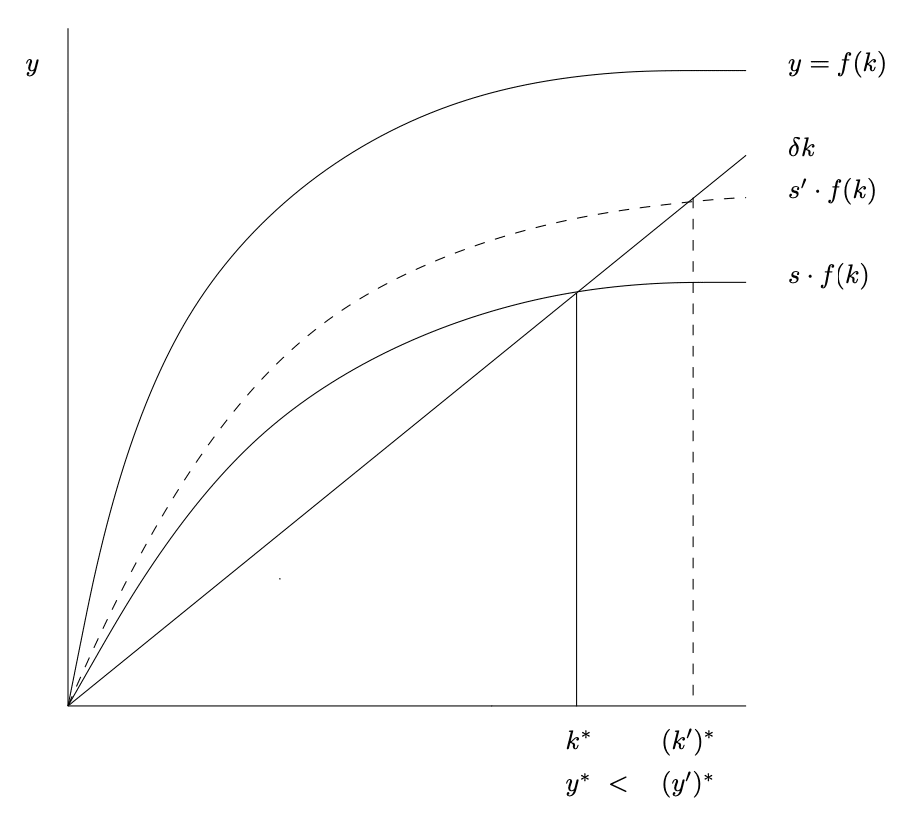

Assistant: This graph appears to illustrate the Solow-Swan model, which is a standard model in economics for understanding long-term economic growth. The model focuses on the relationship between capital accumulation, labor or population growth, and technological progress. Here's a breakdown of the elements in the graph:

...

To test the code, we uploaded the image above, which will be familiar to anyone who has taken a macroeconomics course. GPT-4o was smart enough to identify it as the Solow-Swan model and then explain it! We hadn’t given any hints in the image title or the prompt.
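
One detail worth knowing: the template writes `image/png` into the data URL by hand. If your study material is a .jpg or another format, that prefix should ideally match the file type. The sketch below shows one way to derive it from the file name with Python's standard `mimetypes` module; `to_data_url` is a hypothetical helper of our own, not part of the OpenAI package.

```python
import base64
import mimetypes


def to_data_url(image_bytes: bytes, filename: str) -> str:
    """Build a Base64 data URL whose MIME type matches the file extension (hypothetical helper)."""
    mime_type, _ = mimetypes.guess_type(filename)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"Not a recognised image file: {filename}")
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return f"data:{mime_type};base64,{encoded}"


# Tiny stand-in for real image bytes, just to show the shape of the result.
print(to_data_url(b"abc", "photo.jpg"))  # prints "data:image/jpeg;base64,YWJj"
```

With a real file, you would read the bytes with `open(path, "rb")` and use the returned string as the `url` value in the request.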

Using URLs for Image Processing

In addition to uploading your own images, which is handy while studying, you can also point the model directly at images on the internet! So, if you’re searching the internet and don’t understand something, just paste the link into the code and let the model explain it to you!

Example Case: Econometric Visualization

Econometric interpretations always require a high degree of care. For this example, let’s see how GPT-4o handles it. We specify a link to a particular visualization and ask for an interpretation:

from openai import OpenAI
import os

# Set the API key and model name
MODEL = "gpt-4o"
client = OpenAI(api_key=os.environ.get("OPENAI_API_KEY", "your_api_key_here"))

response = client.chat.completions.create(
    model=MODEL,
    messages=[
        {"role": "system", "content": "Act as a Statistics professor. Your task is to explain statistics in a clear and straightforward manner. Yet you are a perfectionist, so your answer must always be correct. You will always respond in Markdown."},
        {"role": "user", "content": [
            {"type": "text", "text": "Could you explain this graph to me?"},
            # Here the image is referenced by its web address instead of Base64 data
            {"type": "image_url", "image_url": {
                "url": "https://theeffectbook.net/the-effect_files/figure-html/differenceindifferences-dynamiceffect-1.png"}
            }
        ]}
    ],
    # A temperature of 0 keeps the answer as consistent as possible between runs
    temperature=0.0,
)

print(response.choices[0].message.content)

Certainly! The graphs you provided illustrate different approaches to estimating a Regression Discontinuity Design (RDD). RDD is a quasi-experimental pretest-posttest design that attempts to identify the causal effect of an intervention by assigning a cutoff or threshold above or below which an intervention is assigned.

...
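
Both templates send a user message of the same shape: a text part plus an `image_url` part, where the URL is either a web link or a Base64 data URL. As a rough sketch, that shape can be captured in one function. The name `build_image_message` and the scheme check are our own additions for illustration; they are assumptions, not requirements of the API.

```python
from urllib.parse import urlparse


def build_image_message(prompt: str, image_url: str) -> dict:
    """Return a user message with a text part and an image part (hypothetical helper)."""
    # Accept web links and Base64 data URLs; reject things like bare local paths.
    scheme = urlparse(image_url).scheme
    if scheme not in ("http", "https", "data"):
        raise ValueError("image_url must be an http(s) link or a data URL")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": prompt},
            {"type": "image_url", "image_url": {"url": image_url}},
        ],
    }


message = build_image_message(
    "Could you explain this graph to me?",
    "https://theeffectbook.net/the-effect_files/figure-html/differenceindifferences-dynamiceffect-1.png",
)
print(message["content"][0]["text"])
```

The check catches a common slip early: passing a local file path where a link or encoded image is expected.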

Conclusion

What does this mean for your studies? Upload a photo of your study materials and let this powerful new model explain it to you. If you’re looking for additional material and you find a clear image that complements your study materials, add it and get an explanation right away. Don’t understand something? Just ask the model for help, as you always have!