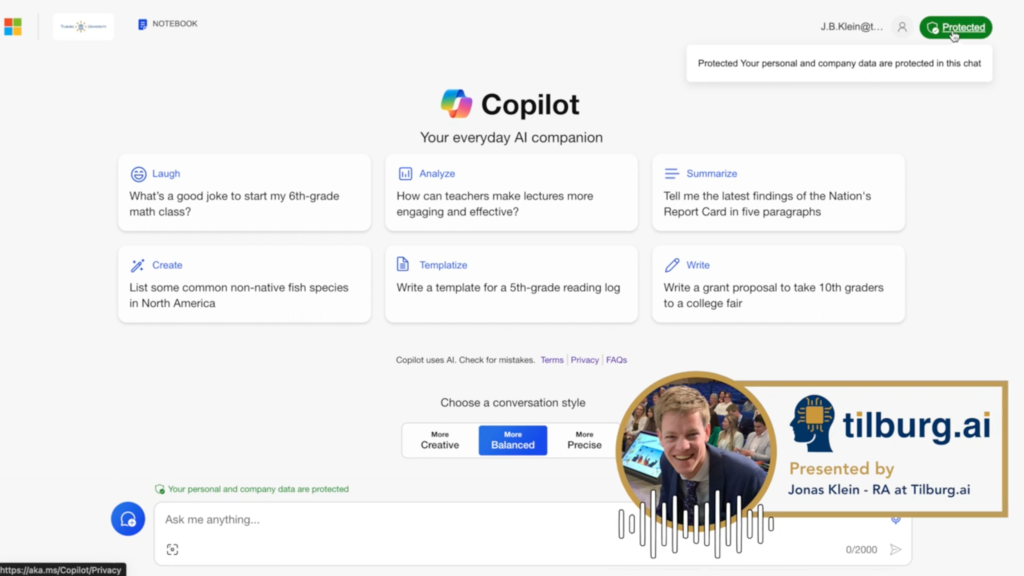

Prompt Engineering Course

Learn how to communicate or “talk” with AI models, particularly ChatGPT.

Perfect for educators who want to integrate AI into their classrooms, students eager to quickly master ChatGPT, or AI enthusiasts.

AI Tool Advisor

Learn to Use GenAI in Your Studies

Students interested in generative AI (GenAI), take note: this e-module explains how ChatGPT and other GenAI tools work and how to use them responsibly in education. Discover practical ways to incorporate GenAI tools into your study routine!

Learn How GenAI Can Impact Your Teaching!

Are you a lecturer or teaching assistant eager to deepen your understanding of generative AI (GenAI)? Learn how to use these tools responsibly and explore the impact they may have on your teaching!