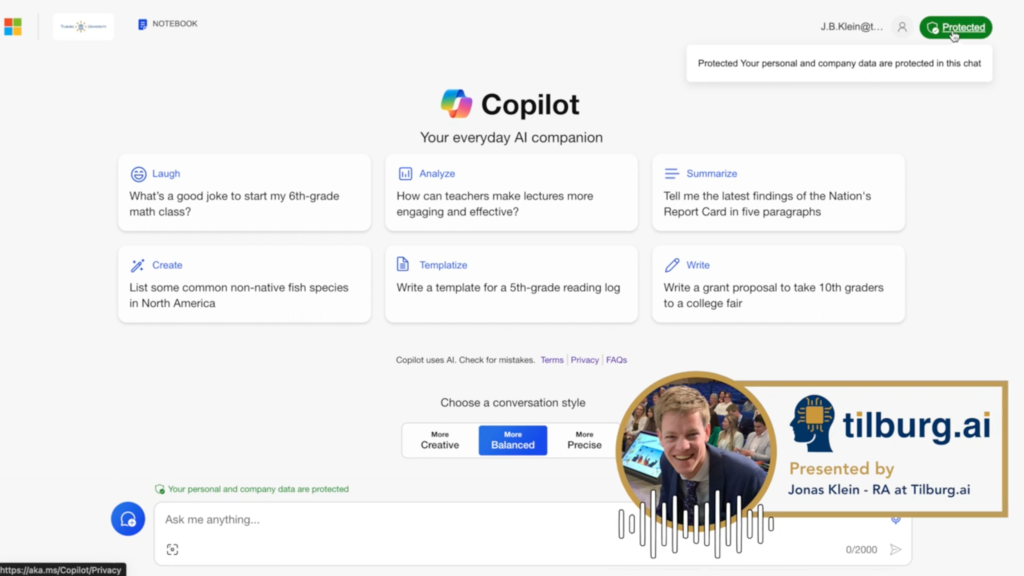

We have created a chatbot platform where teachers and employees can build chatbots and share them with students or colleagues. By uploading materials, you decide what the chatbot knows, and every answer refers back to the source material it draws on.

Everyone at Tilburg University can log in with their university account to get started.

Happy building!